That’s something we were concerned about, too. Today, we'll show you how we check testing quality and why we trust our testers' results.

Challenge

Why testing quality matters

We always collect and analyze our clients’ feedback after testing.

For example, after testing a music app, we learned two things from the reports:

- Some cases were tested on a version of the app other than the one the client had submitted for testing.

- Some cases were marked "Passed" when they should have been "Blocked", because not all the steps could be completed.

We identified 3 tasks to make testing quality more predictable:

- Solve the problem of regression testing on incorrect app versions.

- Check the assumption that some testers are cheaters who assign statuses to test cases without actually checking them.

- If the assumption is confirmed, stop testers from cheating.

Solution

How we implemented a check for the right environment

We added launch codes to make sure the app version a tester installs matches the one in the client's testing task.

A launch code is the personal build code of an app. Testers have to enter it before they can access the test case. If they don’t enter the code, they can’t start testing.

This is what the interface looks like for the tester. They need to enter the code to start the task.

Codes for testers can vary. We showed the launch code on the "About the app" screen.

Our code implementation process went like this:

- We generated launch codes.

- We corrected the process of rolling out regression testing to testers.

- We gave testers step-by-step instructions on how to enter the codes.

This check eliminated the issue of testing the wrong app version.
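The launch-code gate described in this section can be sketched roughly as follows. This is a minimal illustration, not the platform's actual implementation: `RegressionTask`, `can_start_testing`, the case-insensitive comparison, and the sample code value are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RegressionTask:
    """A testing task tied to one specific build (hypothetical model)."""
    task_id: str
    expected_launch_code: str  # the code shown on the build's "About the app" screen


def normalize(code: str) -> str:
    """Ignore surrounding whitespace and letter case (an assumed convenience)."""
    return code.strip().upper()


def can_start_testing(task: RegressionTask, entered_code: str) -> bool:
    """Unlock the test cases only if the entered code matches the task's build."""
    if not entered_code.strip():
        return False  # no code entered: the test cases stay locked
    return normalize(entered_code) == normalize(task.expected_launch_code)


# Hypothetical task and code values, for illustration only.
task = RegressionTask(task_id="music-app-regression", expected_launch_code="A7F3-K2")
print(can_start_testing(task, " a7f3-k2 "))  # True: same build, sloppy typing
print(can_start_testing(task, "B1C9-Z0"))    # False: code from another build
print(can_start_testing(task, ""))           # False: nothing entered
```

Because the code is only visible inside the correct build, a tester who installed a different version has nothing valid to enter, so the check fails before any test case can be opened.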

Sniffing out cheaters

Cheaters try to make money by abusing our system and violating the platform’s policies and tester code of conduct. We use honeypots to identify and remove these testers.

In security, honeypots are bait for hackers: decoys that attackers go for instead of the real system, revealing how they work.

We didn't have any hackers, but we did have testers who marked cases as "Passed" without checking them. We figured we could use honeypots to catch them, too.

We created a version of an app with bugs and sent it out for testing. At the same time, we hid any relationship between test cases and bugs. In this version, we added a honeypot: a case that cannot legitimately receive the "Passed" status. This helped us find testers who didn't look for errors and only pretended to finish their cases. If a tester gave the honeypot the "Passed" status, they were automatically blocked.
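The automatic blocking rule can be sketched like this. It is an illustrative sketch under stated assumptions: the `Tester` and `HoneypotChecker` classes, the case IDs, and the idea of keeping a violation log are hypothetical and not taken from the platform itself.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Tester:
    tester_id: str
    blocked: bool = False


@dataclass
class HoneypotChecker:
    """Blocks testers who mark a honeypot case as "Passed" (hypothetical model)."""
    honeypot_case_ids: set[str]
    # (tester_id, case_id) pairs that triggered a block, kept for later review
    violations: list[tuple[str, str]] = field(default_factory=list)

    def record_result(self, tester: Tester, case_id: str, status: str) -> bool:
        """Record one submitted status; return True if it triggered a block."""
        if case_id in self.honeypot_case_ids and status == "Passed":
            tester.blocked = True
            self.violations.append((tester.tester_id, case_id))
            return True
        return False  # ordinary cases and honest honeypot results pass through


# Hypothetical IDs, for illustration only.
checker = HoneypotChecker(honeypot_case_ids={"case-17"})
honest, cheater = Tester("tester-1"), Tester("tester-2")

checker.record_result(honest, "case-17", "Failed")   # found the planted bug
checker.record_result(cheater, "case-17", "Passed")  # did not actually check
print(honest.blocked, cheater.blocked)  # False True
```

Testers see the honeypot as an ordinary case, so the only way to avoid the block is to actually run the steps and notice that the case cannot pass.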

Results

- We are now absolutely certain that the tester has installed and is testing the correct version of the product. Since cases can now only be accessed with a code, the number of bugs reported due to the wrong environment has decreased.

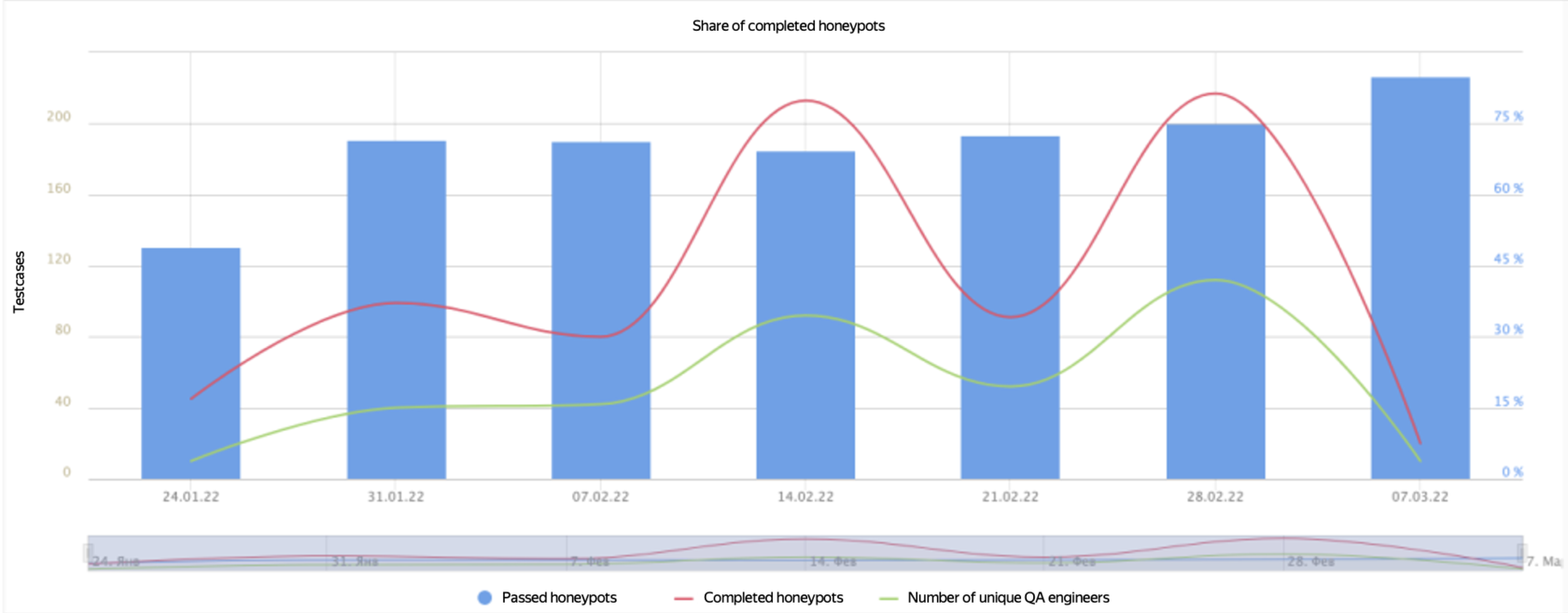

- Cheating has dropped by more than half. When we first started using honeypots and didn't block anyone, testers gave 6 out of 10 honeypots the "Passed" status; now it's only 2.5 out of 10, about 2.4 times fewer. Quality has improved.

On the graph, the red line shows how many honeypots testers passed correctly (without cheating), and the green line indicates the number of unique testers.
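For reference, the before-and-after honeypot figures quoted in the results can be compared directly. The short sketch below only uses the two numbers given in the text (6 out of 10 and 2.5 out of 10); the function name is made up for the example.

```python
def wrongly_passed_rate(passed: float, total: float) -> float:
    """Share of honeypots that testers incorrectly marked as "Passed"."""
    return passed / total


before = wrongly_passed_rate(6, 10)    # honeypots introduced, nobody blocked yet
after = wrongly_passed_rate(2.5, 10)   # with automatic blocking in place

reduction_factor = before / after
relative_drop = (before - after) / before

print(f"before: {before:.0%}, after: {after:.0%}")   # before: 60%, after: 25%
print(f"reduction factor: {reduction_factor:.1f}x")  # reduction factor: 2.4x
print(f"relative drop: {relative_drop:.0%}")         # relative drop: 58%
```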